The Need for Better Terminology in Discussing Existential Risks from AI

Recently, I listened to a podcast1 from the Future of Life Institute in which Andrew Critch (from the Center for Human Compatible AI at Berkeley) discussed his and David Krueger’s recent paper, “AI Research Considerations for Human Existential Safety (ARCHES)”2. Throughout the episode, I found myself impressed by the clarity and the strength of many of the points Critch made. In particular, I’m thinking about how Critch distinguishes “existential safety” from “safety” more generally, “delegation” from “alignment,” and “prepotent AI” from “generally intelligent AI” or “superintelligent AI” as concepts that can help give us more traction in analyzing the potential existential risks posed by artificial intelligences. So, I decided it would be worthwhile to write this post on one of my key takeaways from the episode: the community working on AI-related existential risks needs to adopt better, more precise terminology.

Existential Safety

One of the first ideas Andrew discussed on the FLI podcast that really caught my attention affirms a distinction between overall “safety” and “existential safety” in AI:

Andrew Critch: Yeah. So here’s a problem we have. And when I say we, I mean people who care about AI existential safety. Around 2015 and 2016, we had this coming out of AI safety as a concept. Thanks to Amodei3 and the Robust and Beneficial AI Agenda from Stuart Russell4, talking about safety became normal. Which was hard to accomplish before 2018. That was a huge accomplishment. [emphasis added] And so what we had happen is people who cared about extinction risk from artificial intelligence would use AI safety as a euphemism for preventing human extinction risk. Now, I’m not sure that was a mistake, because as I said, prior to 2018, it was hard to talk about negative outcomes at all. But it’s at this time in 2020 a real problem that you have people … When they’re thinking existential safety, they’re saying safety, they’re saying AI safety. And that leads to sentences like, “Well, self driving car navigation is not really AI safety.” I’ve heard that uttered many times by different people.

Lucas Perry: And that’s really confusing.

Andrew Critch: Right. And it’s like, “Well, what is AI safety, exactly, if cars driven by AI, not crashing, doesn’t count as AI safety?” I think that as described, the concept of safety usually means minimizing acute risks. Acute meaning in space and time. Like there’s a thing that happens in a place that causes a bad thing. And you’re trying to stop that. And the Concrete Problems in AI Safety agenda really nailed that concept. And we need to get past the concept of AI safety in general if what we want to talk about is societal scale risk, including existential risk. Which is acute on a geological time scale. Like you can look at a century before and after and see the earth is very different. But a lot of ways you can destroy the earth don’t happen like a car accident. They play out over a course of years. And things to prevent that sort of thing are often called ethics. Ethics are principles for getting a lot of agents to work together and not mess things up for each other. And I think there’s a lot of work today that falls under the heading of AI ethics that are really necessary to make sure that AI technology aggregated across the earth, across many industries and systems and services, will not result collectively in somehow destroying humanity, our environment, our minds, et cetera. To me, existential safety is a problem for humanity on an existential timescale that has elements that resemble safety in terms of being acute on a geological timescale. But also resemble ethics in terms of having a lot of agents, a lot of different stakeholders and objectives mulling around and potentially interfering with each other and interacting in complicated ways.1

This immediately resonated with me. As someone who likes to think a lot about AI-related existential risks (x-risks), I sometimes find it hard to meaningfully communicate my interests to others, even within the fields of artificial intelligence and machine learning. I admit that I have been guilty in the past of failing to properly understand or make this distinction between safety and existential safety5. Understanding how to build robust, beneficial, and safe AI systems (in the senses illustrated by the “Concrete Problems” paper and the Robust and Beneficial AI agenda) is and should continue to be a priority. However, not all of the considerations in this area will directly apply to guaranteeing existential safety with regard to artificial intelligence, nor will all potential existential risks be addressed by solutions to narrower problems in present-day AI safety. It’s entirely possible that we figure out how to design autonomous vehicles that operate safely (and solve most or all other similar safety problems) and still go extinct from unanticipated externalities brought about by AI systems interacting in the aggregate. In the ARCHES paper, Critch and Krueger summarize the relationship between existential safety and safety for present-day AI systems as follows:

- Deployments of present-day AI technologies do not present existential risks.

- Present-day AI developments present safety issues, which, if solved, could be relevant to existential safety.

- Present-day AI developments present non-safety issues which could later become relevant to existential safety.2

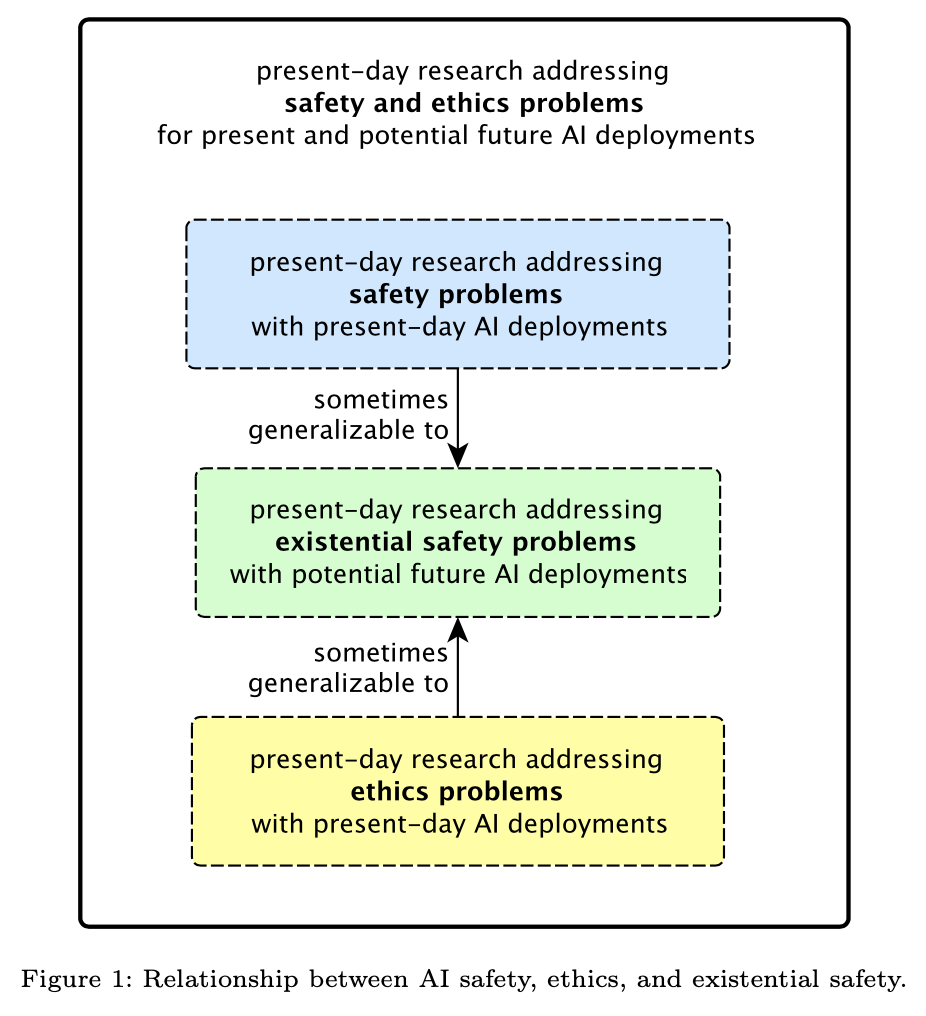

They also provide this diagram which helpfully illustrates these points:

When asked about this relationship between existential safety and “normal” safety on the podcast, Critch had the following to say:

Andrew Critch: The way I think of it, it’s a bit of a three node diagram. There’s present day AI safety problems, which I believe feed into existential safety problems somewhat. Meaning that some of the present day solutions will generalize to the existential safety problems. There’s also present day AI ethics problems, which I think also feed into understanding how a bunch of agents can delegate to each other and treat each other well in ways that are not going to add up to destructive outcomes. That also feeds into existential safety. And just to give concrete examples, let’s take car doesn’t crash, right? What does that have in common with existential safety? Well, existential safety is humanity doesn’t crash. There’s a state space. Some of the states involve humanity exists. Some of the states involve humanity doesn’t exist. And we want to stay in the region of state space where humans exist. Mathematically, it’s got something in common with the staying in the region of state space where the car is on the road and not overheating, et cetera, et cetera. It’s a dynamical system. And it’s got some quantities that you want to conserve and there’s conditions or boundaries you want to avoid. It has this property just like culturally, it has the property of acknowledging a negative outcome and trying to avoid it. That’s, to me, the main thing that safety and existential safety have in common, avoiding a negative outcome. So is ethics about avoiding negative outcomes. And I think those both are going to flow into existential safety.1
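Critch's state-space analogy can be made concrete with a toy example. The sketch below is only an illustration: the two-variable state, the dynamics in `step`, and the thresholds in `is_safe` are all made-up assumptions, not anything taken from the podcast or from ARCHES. It shows "safety" as the property that a trajectory of a dynamical system never leaves a designated safe region.

```python
from typing import Iterable, Tuple

# Hypothetical state: (lateral position on the road, engine temperature).
State = Tuple[float, float]


def step(state: State, steering: float, heating: float) -> State:
    """Toy dynamics: steering shifts the position, heating shifts the temperature."""
    position, temperature = state
    return (position + steering, temperature + heating)


def is_safe(state: State) -> bool:
    """Safe region (made-up numbers): on the road and not overheating."""
    position, temperature = state
    return -1.0 <= position <= 1.0 and temperature < 110.0


def stays_safe(initial: State, inputs: Iterable[Tuple[float, float]]) -> bool:
    """Return True only if every state along the trajectory is in the safe region."""
    state = initial
    if not is_safe(state):
        return False
    for steering, heating in inputs:
        state = step(state, steering, heating)
        if not is_safe(state):
            return False
    return True


start = (0.0, 90.0)
gentle = [(0.1, 1.0)] * 5    # small corrections: the car stays on the road
reckless = [(0.5, 1.0)] * 5  # large drift: the car leaves the road on the third step

print(stays_safe(start, gentle))
print(stays_safe(start, reckless))
```

In this framing, "car doesn't crash" and "humanity doesn't crash" differ in the state space and the safe region, not in the shape of the question being asked.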

What counts as “existential safety” and “existential risk”?

In keeping with the desire to be as clear about terminology as possible, Critch further notes a difference between what he means by the terms “existential safety” and “existential risk” (and how he uses these terms in the ARCHES paper) and how Nick Bostrom, who largely popularized the terms, uses them. Critch prefers to use “existential safety” to refer to preserving existence and “existential risk” to refer to extinction. Bostrom, however, also counts as “existential risks” outcomes that are as bad as extinction without being extinction: according to Bostrom, an existential risk is any risk that, should it occur, would permanently and drastically curtail the potential of Earth-originating intelligent life6. This would include futures of deep suffering, futures in which we are inescapably locked into sub-optimal systems, and so on.

Critch makes the point that leaving “existential risk” to refer simply to the risk of human extinction has the advantage of being easier to agree upon: there’s a lot of debate to be had over what futures might or might not be “as bad as (or worse than) extinction,” but there’s much less debate to be had about what extinction is. (Although there still might be some! If all humans replaced themselves with digital emulations of their own minds, would those emulations constitute “humans”? If not, would such an event represent “human extinction”? Regardless, the point remains that extinction is a much more clear-cut concept than “all futures as bad as or worse than human extinction”.) In a field like computer science, where people tend to care especially about making concepts and terms precise, this is an advantage for furthering discourse.

Instead, Critch mentions the term “societal-scale risks” to capture all of these other undesirable possibilities. For lack of a better term established by the community, I will adopt it when discussing undesirable outcomes that do not result in the extinction of the human species. Andrew also mentions, and I agree, that this is still a good category of risk to think about, and that he doesn’t want people working on those problems to be fractured by the question of whether those outcomes are “worse than extinction” or count as an “existential risk.”

The Shortcomings of “Alignment”

Perhaps the most surprising point that Andrew raised in his interview (for me, at least) was how the whole concept of “alignment” in AI is somewhat inadequate for addressing the issue of existential risk. To illustrate how this is the case, he points to pollution and the problem of externalities more generally: nobody wants everyone else to pollute the atmosphere, but they’re willing to do it themselves, by driving cars to get to work on time, taking flights to visit family members or conduct business, etc. The individuals accrue the benefits of their actions, but the costs associated with these actions (which actually outweigh the benefits) get spread out across everybody else.
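The incentive structure in the pollution example can be made concrete with a toy calculation. This is a minimal sketch under stated assumptions: the function name `pollution_payoffs` and all of the numbers are hypothetical, and the model assumes the cost of each polluting action is split evenly across the whole population.

```python
def pollution_payoffs(population: int, private_benefit: float, cost_per_action: float) -> dict:
    """Compare individual and collective incentives for one polluting action.

    Assumption: the cost of each action is shared equally by everyone,
    while the benefit goes entirely to the person who acts.
    """
    cost_share = cost_per_action / population
    return {
        # What an individual gains by acting, holding everyone else fixed.
        "individual_gain_from_acting": private_benefit - cost_share,
        # What society as a whole gains from that one action.
        "social_gain_per_action": private_benefit - cost_per_action,
        # What each person ends up with if everybody acts.
        "individual_net_if_everyone_acts": private_benefit - population * cost_share,
    }


payoffs = pollution_payoffs(population=1000, private_benefit=10.0, cost_per_action=15.0)
for name, value in payoffs.items():
    print(f"{name}: {value:.3f}")
```

With these made-up numbers, acting is individually worthwhile (the actor bears only a thousandth of the cost), yet each action is a net loss for society, and everyone ends up worse off if everyone acts.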

The same case can be made regarding the role of the alignment problem with respect to existential safety: it’s entirely possible for humanity to go extinct as a result of the aggregate behavior of interactions between individually-aligned systems, because they weren’t designed to interact well enough, or because they had different stakeholders, or some similarly-imaginable situation. As typically discussed, the alignment problem is roughly that of aligning what a single AI system tries to do with the values and interests of a single human. As I understand it, this conception (of the AI trying to do what its user wants) is roughly Paul Christiano’s notion of intent alignment7. But the rollout of advanced AI technologies will nearly inevitably be massively multipolar, with lots of stakeholders involved, and it is exactly this type of situation in which externalities arise. Andrew believes that existential risk is more likely to arise from these externalities than from a failure to align individual systems. In addition, quite apart from the externality problem that work on alignment won’t address, some work on alignment may not be directly helpful towards solving the problem of existential risk. So if your primary goal is to avoid existential risks from AI, it’s not immediately clear that working on alignment is the most direct or fruitful path to that end.

This was pretty surprising to me because prior to hearing these arguments, my implicitly-held belief was that a solution to the alignment problem would prevent AI-related existential catastrophe. I had never explicitly questioned this link between alignment and existential safety (and, if someone had questioned me on this issue specifically, I might have realized it was a shaky assumption). Further, I’m not sure I was even equipped to frame such a question properly without having in mind the clear distinction between safety and existential safety raised here by Critch. However, once presented with this argument, I was pretty quickly able to see its potential validity. I’m not sure how widely held this erroneous belief is within the communities of people working on AI-related existential risks and the alignment problem, but I’m inclined to believe that it’s relatively widespread, since I’ve never seen this issue raised before in anything that I’ve read on the AI Alignment Forum, for example. Perhaps people working on aligning single AI systems with single users do know that we’ll have to understand much more complicated dynamics of systems with multiple AIs and multiple humans, and they just figure we have to solve the single/single case first before we can fully understand the multi/multi case. (See the section below on multi/multi preparedness for more discussion on this point.) This may very well be true, but I’ve never seen this caveat explicitly raised in any discussion of the alignment problem.

Furthermore, it’s unclear how the concept of alignment even generalizes to cases where there are multiple users and/or multiple AI systems. As Critch notes in the interview,

We’re trying to align the AI technology with the human values. So, you go from single/single to single/multi. Okay. Now we have multiple AI systems serving a single human, that’s tricky. We got to get the AI systems to cooperate. Okay. Cool. We’ll figure out how the cooperation works and we’ll get the AI systems to do that. Cool. Now we’ve got a fleet of machines that are all serving effectively. Okay. Now let’s go to multi-human, multi-AI. You’ve got lots of people, lots of AI systems in this hyper interactive relationship. Did we align the AIs with the humans? Well, I don’t know. Are some of the humans getting really poor, really fast, while some of them are getting really rich, really fast? Sound familiar? Okay. Is that aligned? Well, I don’t know. It’s aligned for some of them. Okay. Now we have a big debate. I think that’s a very important debate and I don’t want to skirt it. However, I think you can ask the question “did the AI technology lead to human extinction?” without having that debate.1

Nonetheless, it remains clear that there is still some relationship between the concept of alignment and how we might begin to consider and address existential safety overall. This connection is nicely summarized in ARCHES:

The AI alignment problem may be viewed as the first and simplest prerequisite for safely integrating highly intelligent AI systems into human society. If we cannot solve this problem, then more complex interactions between multiple humans and/or AI systems are highly unlikely to pan out well. On the other hand, if we do solve this problem, then solutions to manage the interaction effects between multiple humans and AI systems may be needed in short order.2

(This need for solutions to manage the interaction effects between multiple humans and AI systems shortly after solving the alignment problem is covered more specifically below under “The Multiplicity Thesis and Multi/multi Preparedness.”)

Delegation

So, if the concept of alignment is inadequate for addressing existential risks, insofar as its solution does not guarantee existential safety, where are we left in trying to meaningfully discuss approaches to avoiding AI-associated x-risks? In ARCHES, Critch and Krueger do so by viewing the relationship between humans and AI as one of delegation: “when some humans want something done, those humans can delegate responsibility for the task to one or more AI systems.”2 The concept is useful in beginning to address the additional layers of complexity produced by multiple humans and/or AIs:

- Single(-human)/single(-AI system) delegation means delegation from a single human stakeholder to a single AI system (to pursue one or more objectives).

- Single/multi delegation means delegation from a single human stakeholder to multiple AI systems.

- Multi/single delegation means delegation from multiple human stakeholders to a single AI system.

- Multi/multi delegation means delegation from multiple human stakeholders to multiple AI systems.2

Notice that under this classification, single/single delegation essentially is the alignment problem. That is, while it’s unclear how to generalize the concept of alignment to situations with multiple stakeholders, it’s much clearer how we can frame alignment as a specific case of the broader concept of delegation. ARCHES makes this relation more explicit:

Consider the question: how can one build a single intelligent AI system to robustly serve the many goals and interests of a single human? Numerous other authors have considered this problem before, under the name “alignment”….

(Despite the current use of the term “alignment” for this existing research area, this report is instead organized around the concept of delegation, because its meaning generalizes more naturally to the multi-stakeholder scenarios to be considered later on. That is, while it might be at least somewhat clear what it means for a single, operationally distinct AI system to be “aligned” with a single human stakeholder, it is considerably less clear what it should mean to be aligned with multiple stakeholders. It is also somewhat unclear whether the “alignment” of a set of multiple AI systems should mean that each system is aligned with its stakeholder(s) or that the aggregate/composite system is aligned.)2

Control, Instruction, Comprehension

ARCHES then delineates three human capabilities as being “integral to successful human/AI delegation: comprehension, instruction, and control”. These capabilities are defined in the report as:

- Comprehension: Human/AI comprehension refers to the human ability to understand how an AI system works and what it will do.

- Instruction: Human/AI instruction refers to the human ability to convey instructions to an AI system regarding what it should do.

- Control: Human/AI control refers to the human ability to retain or regain control of a situation involving an AI system, especially in cases where the human is unable to successfully comprehend or instruct the AI system via the normal means intended by the system’s designers.2

The authors take care to note that maintaining focus on human capabilities “serves to avoid real and apparent dependencies of arguments upon viewing AI systems as ‘agents’, and also draws attention to humans as responsible and accountable for the systems to which they delegate tasks and responsibilities.”2

These capabilities can also be combined with the above classification of types of delegation, so that we can talk about single/single comprehension, multi/multi control, and so on. The authors use this overall classification scheme to structure both their later discussion of research directions and their analysis of how progress in these different areas of research flows through to affect progress in the other areas. These parts of the report are extremely interesting and worth reading, but beyond the scope of this article’s discussion.
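The combined classification described above is simply the cross product of the four delegation types with the three human capabilities. A minimal sketch of that grid follows; the enum and function names are hypothetical choices made for illustration, and only the category labels themselves come from ARCHES.

```python
from enum import Enum
from itertools import product


class Multiplicity(Enum):
    """How many parties are on one side of a delegation relationship."""
    SINGLE = "single"
    MULTI = "multi"


class Capability(Enum):
    """The three human capabilities ARCHES treats as integral to delegation."""
    COMPREHENSION = "comprehension"
    INSTRUCTION = "instruction"
    CONTROL = "control"


def area_label(humans: Multiplicity, ai_systems: Multiplicity, capability: Capability) -> str:
    """Build a label in the '<humans>/<AI systems> <capability>' form used in the text."""
    return f"{humans.value}/{ai_systems.value} {capability.value}"


# 2 (human side) x 2 (AI side) x 3 (capabilities) = 12 combinations.
research_areas = [
    area_label(humans, ai_systems, capability)
    for humans, ai_systems, capability in product(Multiplicity, Multiplicity, Capability)
]

for area in research_areas:
    print(area)
```

The list runs from "single/single comprehension" through "multi/multi control", which matches the two examples named in the paragraph above.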

The Multiplicity Thesis and Multi/multi Preparedness

It seems highly likely that soon after we figure out single/single delegation, we will be dealing with a situation with multiple human stakeholders and/or multiple AI systems. This is what the authors refer to as the multiplicity thesis:

The multiplicity thesis. Soon after the development of methods enabling a single human stakeholder to effectively delegate to a single powerful AI system, incentives will likely exist for additional stakeholders to acquire and share control of the system (yielding a multiplicity of engaging human stakeholders) and/or for the system’s creators or other institutions to replicate the system’s capabilities (yielding a multiplicity of AI systems).2

The additional complexity of maintaining existential safety in systems with interactions between multiple humans and/or multiple AI systems, together with the fact that this complexity might not be well-addressed by research on single/single delegation, raises the question of how important it is to prepare for this complexity before it arises. It’s possible that work on single/single delegation builds naturally to solutions for the other types of delegation. However, because the multiplicity thesis implies that we would need these other solutions soon after the discovery of effective solutions to single/single delegation, it seems prudent to consider problems in single/multi, multi/single, and multi/multi delegation in parallel with work being done on single/single alignment. This value judgment is described as “multi/multi preparedness” in ARCHES:

Multi/multi preparedness. From the perspective of existential safety in particular and societal stability in general, it is wise to think in technical detail about the challenges that multi/multi AI delegation might eventually present for human society, and what solutions might exist for those challenges, before the world would enter a socially or geopolitically unstable state in need of those solutions.2

As Andrew elaborates in the podcast,

It’s because the researchers who care about existential safety want to understand what I would call a single/single delegation, but what they would call the method of single/single alignment, as a building block for what will be built next. But I sort of think different. I think that’s a great reasonable position to have. I think differently than that because I think the day that we have super powerful single/single alignment solutions is the day that it leaves the laboratory and rolls out into the economy. Like if you have very powerful AI systems that you can’t single/single align, you can’t ship a product because you can’t get it to do what anybody wants.

So I sort of think single/single alignment solutions sort of shorten the timeline. It’s like deja vu. When everyone was working on AI capabilities, the alignment people are saying, “Hey, we’re going to run out of time to figure out alignment. You’re going to have all of these capabilities and we’re not going to know how to align them. So let’s start thinking ahead about alignment.” I’m saying the same thing about alignment now. I’m saying once you get single/single alignment solutions, now your AI tech is leaving the lab and going into the economy because you can sell it. And now, you’ve run out of time to have solved the multipolar scenario problem. So I think there’s a bit of a rush to figure out the multi-stakeholder stuff before the single/single stuff gets all figured out.1

Andrew also believes that the problems in situations involving multiple human stakeholders and/or multiple AI systems are less likely to be solved by economic pressures alone. He argues that at some point, solving the single/single delegation problem will become profitable because “if you have very powerful AI systems that you can’t single/single align, you can’t ship a product because you can’t get it to do what anybody wants.”1 But it’s less clear that the other problems will be solved the same way, so this might make a difference for people deciding which research directions to follow if their goal is for their work to reduce existential risk as much as possible.

Prepotence

The third important new concept introduced by ARCHES that I want to highlight is prepotence. An AI system or technology is said to be prepotent “if its deployment would transform the state of humanity’s habitat—currently the Earth—in a manner that is at least as impactful as humanity and unstoppable to humanity.”2 More specifically, “at least as impactful as humanity” is taken to mean that “if the AI system or technology is deployed, then its resulting transformative effects on the world would be at least as significant as humanity’s transformation of the Earth thus far, including past events like the agricultural and industrial revolutions,” and “unstoppable to humanity” means “if the AI system or technology is deployed, then no concurrently existing collective of humans would have the ability to reverse or stop the transformative impact of the technology (even if every human in the collective were suddenly in unanimous agreement that the transformation should be reversed or stopped)”.2

This two-part condition provides a useful link between the concept of prepotence and the Open Philanthropy Project’s concept of transformative AI8. Transformative AI systems are those that correspond to the first part of the above definition; that is, “prepotent AI systems/technologies are transformative AI systems/technologies that are also unstoppable to humanity after their deployment”2.
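The logical structure of this definition is a conjunction of two conditions, with "transformative" corresponding to the first one. The sketch below encodes only that structure. The class and field names are hypothetical, and the two booleans stand in for judgments that would be very hard to make about any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentAssessment:
    """Hypothetical summary of the two judgments in the ARCHES definition."""
    at_least_as_impactful_as_humanity: bool
    unstoppable_to_humanity: bool


def is_transformative(assessment: DeploymentAssessment) -> bool:
    """Transformative AI corresponds to the first condition only."""
    return assessment.at_least_as_impactful_as_humanity


def is_prepotent(assessment: DeploymentAssessment) -> bool:
    """Prepotent AI is transformative AI that is also unstoppable to humanity."""
    return is_transformative(assessment) and assessment.unstoppable_to_humanity


stoppable_transformation = DeploymentAssessment(
    at_least_as_impactful_as_humanity=True,
    unstoppable_to_humanity=False,
)
unstoppable_transformation = DeploymentAssessment(
    at_least_as_impactful_as_humanity=True,
    unstoppable_to_humanity=True,
)

print(is_transformative(stoppable_transformation), is_prepotent(stoppable_transformation))
print(is_transformative(unstoppable_transformation), is_prepotent(unstoppable_transformation))
```

Under this reading every prepotent system is transformative, but a transformative system that humanity could still stop or reverse is not prepotent.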

Importantly, superintelligence is not a necessary property of a prepotent system or technology. The authors mention three classes of capabilities that could enable prepotence: technological autonomy, replication speed, and social acumen2. None of these capabilities requires superintelligence in principle. As the report puts it:

However, not all of the competencies stipulated in the definition of superintelligence are necessary for an AI technology to pose a significant existential risk. Although Bostrom argues that superintelligence would likely be unstoppable to humanity (i.e., prepotent), his arguments for this claim (e.g., the “instrumental convergence thesis”) seem predicated on AI systems approximating some form of rational agency, and this report aims to deemphasize such unnecessary assumptions. It seems more prudent not to use the notion of superintelligence as a starting point for concern, and to instead focus on more specific sets of capabilities that present “minimum viable existential risks”, such as technological autonomy, high replication speed, or social acumen.2

The takeaway here, I think, is that we don’t need to wait for superintelligence to potentially have a viable existential threat from AI and that prepotence is a potentially more useful property to consider than superintelligence in analyzing existential risks. After all, a superintelligent system might very well be prepotent and arguably would only constitute an existential risk if it were. (Is non-prepotent superintelligence even a meaningful concept, and if it is, could a non-prepotent superintelligence still constitute an existential risk?)

Misaligned/Unsurvivable Prepotent AI

The connection between the property of prepotence and the potential existential risks that systems or technologies having this property might represent is illuminated by considering what the authors term misaligned prepotent AI, or MPAI. Setting aside the aforementioned difficulties in defining “alignment” for multi-stakeholder systems, a threshold needs to be defined for what it means for prepotent AI to be misaligned, and ARCHES takes this to be the threshold of survivability for humanity, much as the report takes “existential risk” to mean extinction:

MPAI. We say that a prepotent AI system is misaligned if it is unsurvivable (to humanity), i.e., its deployment would bring about conditions under which the human species is unable to survive. Since any unsurvivable AI system is automatically prepotent, misaligned prepotent AI (MPAI) technology and unsurvivable AI technology are equivalent categories as defined here.2

A natural and important question to consider when addressing the potential dangers of prepotence is how likely a prepotent AI is to be unsurvivable. We can be certain that there is some chance of a prepotent AI technology leading to humanity’s extinction. By definition, the deployment of a prepotent AI technology brings about large and irreversible changes to the Earth, and because the physical conditions necessary for human survival are highly specific, a great many of the changes that a prepotent AI could make to the environment would render the Earth uninhabitable for humans. Further, while any one of these unsurvivable environmental changes might be unlikely to occur, the likelihood that at least one of them would occur as a result of the deployment of a prepotent AI technology is much higher. Perhaps humans could avoid these outcomes by developing provably safe prepotent AI technology, but this might be needlessly riskier than just building beneficial non-prepotent AI systems (which we could decide to stop if needed) instead. In ARCHES, this notion is encapsulated in the Human Fragility Argument:

The Human Fragility Argument. Most potential future states of the Earth are unsurvivable to humanity. Therefore, deploying a prepotent AI system absent any effort to render it safe to humanity is likely to realize a future state which is unsurvivable. Increasing the amount and quality of coordinated effort to render such a system safe would decrease the risk of unsurvivability. However, absent a rigorous theory of global human safety, it is difficult to ascertain the level of risk presented by any particular system, or how much risk could be eliminated with additional safety efforts.2
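The step from "each unsurvivable change is individually unlikely" to "some unsurvivable change is much more likely" can be illustrated with basic probability. The sketch below is a toy calculation, not an estimate of real risk: it assumes the candidate changes are independent, and the count and per-change probability are made-up numbers.

```python
import math
from typing import Iterable


def probability_at_least_one(probabilities: Iterable[float]) -> float:
    """Probability that at least one of several independent events occurs.

    Uses P(at least one) = 1 - product over events of (1 - p_i).
    Independence is an assumption of this sketch, not a claim from ARCHES.
    """
    probability_none = math.prod(1.0 - p for p in probabilities)
    return 1.0 - probability_none


# Made-up illustration: 100 distinct unsurvivable changes, each with a 1% chance.
individual_risks = [0.01] * 100
combined_risk = probability_at_least_one(individual_risks)

print(f"largest individual risk: {max(individual_risks):.2f}")
print(f"risk that at least one occurs: {combined_risk:.2f}")
```

With these illustrative numbers, no single change has more than a 1% chance of occurring, yet the chance that at least one of them occurs is about 0.63.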

As Andrew explains in the podcast, “I think it’s pretty simple. I think human frailty [sic] implies don’t make prepotent AI. If we lose control of the knobs, we’re at risk of the knobs getting set wrong… So that’s why some people talk about the AI control problem, which is different I claim than the AI alignment problem. Even for a single powerful system, you can imagine it looking after you, but not letting you control it.”1 This argument makes intuitive sense to me; we shouldn’t build prepotent AI systems or technology because doing so would definitionally constitute a loss of control, even if it would not necessarily cause extinction.

Conclusion

In their paper “AI Research Considerations for Human Existential Safety (ARCHES),” Andrew Critch and David Krueger introduce the concepts of delegation and prepotence in order to begin to more meaningfully discuss existential risks associated with the development of artificial intelligence. I particularly appreciated Andrew’s discussion of this paper on the Future of Life Institute podcast for prodding me to question some of my assumptions about the relationship between the concepts of alignment and existential safety. For me, both the paper and the podcast highlighted the need for adopting more precise terminology in discussing these issues, and I think their presentation of the concepts of delegation and prepotence represents a first step in this direction.

On a final note, ARCHES is filled with many other gems of insight that I did not have space to mention here. I highly recommend reading the whole report and making your own assessment of these ideas.

1. Future of Life Institute, Andrew Critch on AI Research Considerations for Human Existential Safety

2. Andrew Critch and David Krueger, AI Research Considerations for Human Existential Safety (ARCHES)

3. Dario Amodei et al., Concrete Problems in AI Safety

4. Stuart Russell, Daniel Dewey, Max Tegmark, Research Priorities for Robust and Beneficial Artificial Intelligence

5. Jack Koch, The Need for ML Safety Researchers

6. Nick Bostrom, Existential Risks

7. Paul Christiano, Clarifying AI Alignment

8. Open Philanthropy, Some Background on Our Views Regarding Advanced Artificial Intelligence